I recently was able to finally play Quantic Dream's Heavy Rain (my original PS3 quit reading Blu-rays, grrrr) and was blown away by the first few hours of gameplay. The only complaint I have so far is with the dialogue in a certain scene in which one of the main characters loses his son in a mall. You know what I'm talking about. If not, just listen to the first minute or so (after that there are spoilers).

For a pretty dramatic and frantic scene, which the visuals pull off wonderfully, I was laughing at Ethan yelling "JASON" over and over again, partly because of the awkward accent but mainly because there were only 2-3 audio variations of him yelling when there should have been 10-16. Oopsies. Because of its goofiness, fans have made hilarious YouTube videos parodying this scene. More variance within the dialogue could easily have added intensity and weight to such an emotionally stirring scene.

One of last year's games I was most impressed with aurally was Martin Stig Andersen's work for Playdead's Limbo. Not only did the audio pull the player deeper into the world, everything also felt very organic, never once making the player question what they were experiencing. One of the ways he was able to achieve this was using simple variations and randomness for movement, specifically through footsteps.

"Sound comes to us over time. You don't get a snapshot of sound. Therefore, what you notice with sound, the essential building block, is change." - Gary Rydstrom

Footsteps are easily something average players may gloss over while playing, which really is a good thing. That means the sound designer has kept your attention on the action on screen, heightening the experience. A number of games have achieved this in various ways. Ubisoft's Assassin's Creed series takes the ultra-realistic approach by adding cloth and equipment sounds to the player's footsteps. EA's Mirror's Edge takes a more stylistic approach to match its unique presentation and setting. What tricks us into believing that what we're hearing is really happening is the varied, random quality of the movement.

The standard practice for randomizing sounds is to have 10-16 variations of a footstep to cycle through, though it's up to the sound designer how much variation is enough. We can go a step further by adding multiple groups of varying sounds for cloth movement, equipment jingles, heavy breathing, etc. Instead of having 10 files with all of those materials baked into each one, we can break the materials into separate groups, giving us 40+ individual variations to layer together. Let's see how we can perform this basic task using Epic's Unreal Engine 3 and Audiokinetic's Wwise.
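To make the idea concrete outside of any particular engine, here is a minimal Python sketch of a randomized group that avoids repeating its most recent picks. The class name `RandomContainer`, the `avoid_last` setting, and the file names are all hypothetical, chosen for illustration. This is not how UE3 or Wwise implement it internally.

```python
import random


class RandomContainer:
    """Picks a random variation while skipping the most recent picks."""

    def __init__(self, files, avoid_last=2, rng=None):
        if avoid_last >= len(files):
            raise ValueError("avoid_last must be smaller than the number of files")
        self.files = list(files)
        self.avoid_last = avoid_last
        self.rng = rng or random.Random()
        self.recent = []  # the last few files played, oldest first

    def next(self):
        # Only consider files that were not played recently.
        candidates = [f for f in self.files if f not in self.recent]
        choice = self.rng.choice(candidates)
        self.recent.append(choice)
        if len(self.recent) > self.avoid_last:
            self.recent.pop(0)
        return choice


def make_group(name, count=10):
    """Build hypothetical file names such as 'cloth_03.wav'."""
    return [f"{name}_{i:02d}.wav" for i in range(1, count + 1)]


# Four groups of ten files each: 40 files on disk.
GROUPS = {
    name: RandomContainer(make_group(name))
    for name in ("footstep", "cloth", "jingle", "breath")
}


def next_step():
    """Pick one file from every group for a single footfall."""
    return {name: container.next() for name, container in GROUPS.items()}


def total_combinations():
    """Number of distinct layered results the groups can produce."""
    total = 1
    for container in GROUPS.values():
        total *= len(container.files)
    return total


if __name__ == "__main__":
    print(next_step())
    print(total_combinations())
```

Under these assumptions, 40 files yield 10 x 10 x 10 x 10 possible layered steps, which is why splitting materials into groups pays off far more than recording 40 pre-mixed footsteps would.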

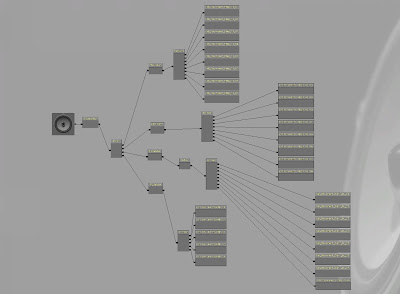

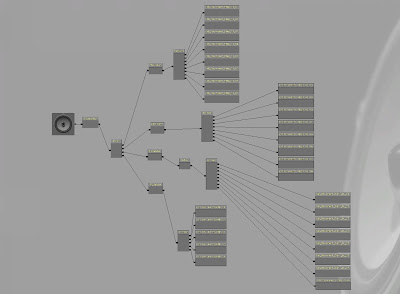

Using the powerful out-of-the-box sound implementation in Unreal, we can easily make a sound cue to randomize through sound files. After importing all the sounds associated with the footstep movement, create a new sound cue and add the 'Attenuation' and 'Mixer' nodes.

For this example, I'm using four different sets of sounds for this character's movement: footstep, cloth, metal jingles, and breath/panting. Each group is connected to its own 'Random' and 'Modulator' nodes, which together randomize the file selection, pitch, and volume. These are then connected to the 'Mixer' node. I've added a slight delay to the cloth to bring out the sound of the leg movement after stepping. After everything is hooked up, press play a few times and test your sweet sound.
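For readers who think better in code than in node graphs, the cue described above can be roughly modeled like this. It is only a sketch of the signal flow (random pick, then pitch/volume modulation, then a mix of all layers), not UE3's actual API. The `Layer` and `trigger_cue` names, the file names, and every numeric range are assumptions made up for the example.

```python
import random
from dataclasses import dataclass


@dataclass
class Layer:
    """One branch of the cue: a 'Random' pick feeding a 'Modulator'."""
    name: str
    files: list
    pitch_range: tuple = (0.95, 1.05)   # assumed values, tune by ear
    volume_range: tuple = (0.80, 1.00)  # assumed values, tune by ear
    delay_s: float = 0.0                # offset before this layer plays


def variations(name, count=10):
    """Build hypothetical file names such as 'jingle_07.wav'."""
    return [f"{name}_{i:02d}.wav" for i in range(1, count + 1)]


LAYERS = [
    Layer("footstep", variations("footstep")),
    # The cloth is delayed slightly so it lands just after the step.
    Layer("cloth", variations("cloth"), delay_s=0.05),
    Layer("jingle", variations("jingle"), volume_range=(0.50, 0.80)),
    Layer("breath", variations("breath"), pitch_range=(0.98, 1.02)),
]


def trigger_cue(layers, rng=random):
    """Play the role of the 'Mixer': one voice per layer for one footfall."""
    voices = []
    for layer in layers:
        voices.append({
            "layer": layer.name,
            "file": rng.choice(layer.files),
            "pitch": rng.uniform(*layer.pitch_range),
            "volume": rng.uniform(*layer.volume_range),
            "delay_s": layer.delay_s,
        })
    return voices


if __name__ == "__main__":
    for voice in trigger_cue(LAYERS):
        print(voice)
```

Each call to `trigger_cue` returns a different set of playback instructions, which is the same effect as pressing play on the sound cue a few times in the editor.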

From here, the fun begins: once the cues are tagged to animations and you're playing in-game, you get to adjust your mix levels and attenuation numbers until everything sounds balanced. UE3 does a great job with its sound cue node configuration for the visual learners out there, along with its complex yet easily understood Kismet editor.

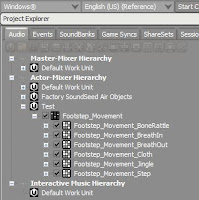

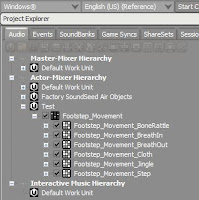

With Wwise's advanced audio pipeline solution, we can achieve the same results, along with some added ease and perks. Using the same files as before, import the audio into the "Actor-Mixer Hierarchy." Next, group each set of sounds into Random Containers, naming each container after its respective group (e.g., cloth, jingles). Then shift-select all the containers, right-click, and choose "New Parent" - "Actor-Mixer," which is named "Footstep_Movement" in my example.

Shift-select all Random Containers again and right-click "New Event (Single Event for all Objects)" and select "Play." This automatically takes all the sounds we need and neatly adds them to our single New Event, which we've named "Play_Footstep_Movement." Events are what Wwise uses to trigger the audio in video games.

From here we can begin mixing levels to suit the sound we want in-game by making adjustments in the Event and Contents Editor. Pretty simple! To add the delay we had in our UE3 example, simply adjust the delay action property within the Event Editor.

I've only skimmed the surface of a vast ocean of study and knowledge on footstep and movement sounds. Definitely check out Damian Kastbauer's in-depth analysis on footstep sounds in video games including Prince of Persia, Mass Effect, and Fallout 3. I was able to watch a presentation of this study at this past year's GDC and definitely recommend reading it!

Next time I hear someone yell "JASON" in public, I'll try to hold back my laughter. ;)